Needs Organization Admin Role

Required for cloud-hosted data stores

This action is required only when registering a cloud-hosted data store such as AWS S3, Google Cloud Storage, or Azure Blob Storage.

On the Containers page, click the + Add Container button to create a container.


Fill in the required information:

- Name: An identifier for the container.

- Data Store Name: Enter the name of the data store. A data store represents a data storage bucket, such as an S3 bucket.

- Select Store Type: Choose one of the available store types: S3, Azure Hot, or Google Cloud Storage. The form updates to show the fields relevant to the selected store type; these fields are described below.

After filling in the fields, click 'Save' to create the container.

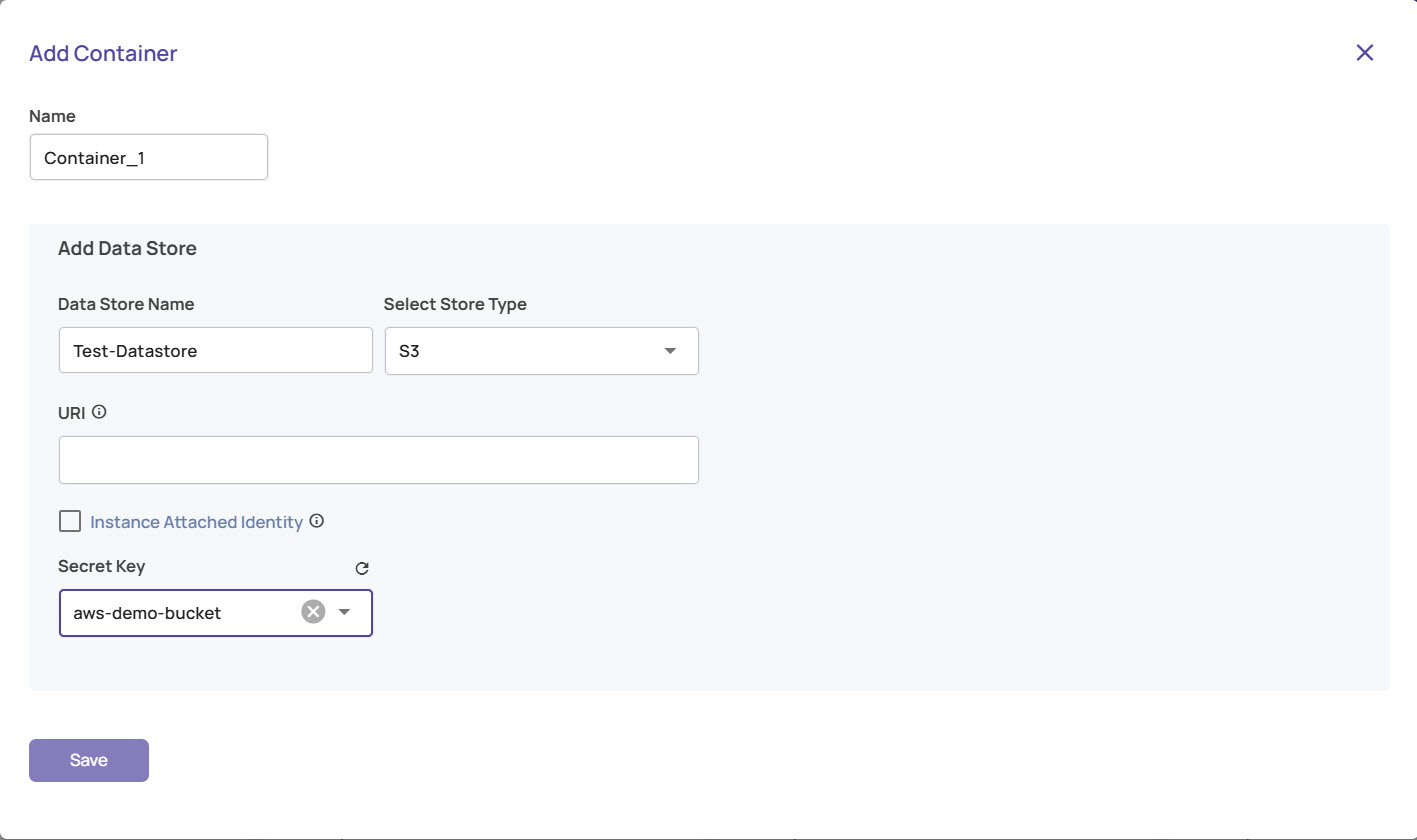
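As a rough illustration of how the form adapts to the selected store type, the Python sketch below maps each store type to the fields described in this section. The `StoreType` enum, its label strings, the field lists, and `form_fields` are hypothetical names chosen for this example; they are not part of any Data Explorer API.

```python
from enum import Enum
from typing import List


class StoreType(Enum):
    # Hypothetical, illustrative labels for the three supported store types.
    S3 = "S3"
    AZURE_HOT = "Azure Hot"
    GOOGLE_CLOUD_STORAGE = "Google Cloud Storage"


# Fields shown regardless of the store type.
COMMON_FIELDS = ["Name", "Data Store Name", "Store Type"]

# Store-type-specific fields, taken from the per-store sections of this page.
STORE_FIELDS = {
    StoreType.S3: ["URI", "Secret Key", "Instance Attached Identity", "Region"],
    StoreType.AZURE_HOT: ["URI", "Secret Key"],
    StoreType.GOOGLE_CLOUD_STORAGE: ["URI", "Secret Key"],
}


def form_fields(store_type: StoreType) -> List[str]:
    """Return the fields the form would show once a store type is selected."""
    return COMMON_FIELDS + STORE_FIELDS[store_type]


print(form_fields(StoreType.AZURE_HOT))
# → ['Name', 'Data Store Name', 'Store Type', 'URI', 'Secret Key']
```

Only S3 adds fields beyond the URI and secret, which is why its section below is the longest.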

AWS S3

- URI: Enter the URI in the format `s3a://<bucket-name>/<optional-directory-name>`. The URI can point to a bucket or to any sub-directory within the bucket, at any level of the hierarchy. Only files under the URI can be featurized and registered with Data Explorer.

- Secret Key: Credentials stored as a secret of AWS type on the Secrets page.

- Instance Attached Identity: Data is featurized and registered with Data Explorer on the machine where adectl is installed, so that machine needs access to the S3 bucket. This access can be granted through an AWS instance-attached role; if it is configured this way, check the 'Instance Attached Identity' box so that Data Explorer uses the instance-attached role. When 'Instance Attached Identity' is checked, the secret is optional. If a secret is provided, the web portal uses it to show full-resolution images; if not, the portal shows thumbnails instead. This gives the admin complete control over whether the web portal has access to the data store.

- Region: The AWS region where the S3 bucket is located (e.g., us-east-1).
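The S3 URI scope and the secret rules can be summarized in a short Python sketch. It assumes that "under the URI" means a simple object-key prefix match, and it infers from the text that a secret is required whenever Instance Attached Identity is unchecked. `parse_s3a_uri`, `key_in_scope`, and `check_s3_container` are hypothetical helpers, not Data Explorer functions, and `example-bucket` is an illustrative name.

```python
from typing import Optional, Tuple
from urllib.parse import urlparse


def parse_s3a_uri(uri: str) -> Tuple[str, str]:
    """Split s3a://<bucket-name>/<optional-directory-name> into (bucket, key prefix).

    Hypothetical helper for illustration only.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "s3a" or not parsed.netloc:
        raise ValueError(f"expected s3a://<bucket-name>/..., got {uri!r}")
    directory = parsed.path.strip("/")
    # A bucket-level URI has an empty prefix; a directory URI gets a trailing "/"
    # so that "images" does not also match "images2/...".
    prefix = directory + "/" if directory else ""
    return parsed.netloc, prefix


def key_in_scope(uri: str, key: str) -> bool:
    """Assumed rule: an object is in scope if its key starts with the URI's prefix."""
    _, prefix = parse_s3a_uri(uri)
    return key.startswith(prefix)


def check_s3_container(instance_attached_identity: bool, secret: Optional[str]) -> str:
    """Apply the secret rules described above and return the portal's image mode."""
    if not instance_attached_identity and secret is None:
        # Assumption: without an instance-attached role, a secret is mandatory.
        raise ValueError("Secret Key is required unless Instance Attached Identity is checked")
    # With a secret the portal can show full-resolution images; otherwise thumbnails.
    return "full-resolution" if secret is not None else "thumbnail"


print(parse_s3a_uri("s3a://example-bucket/images/2023"))  # → ('example-bucket', 'images/2023/')
print(key_in_scope("s3a://example-bucket/images", "images2/cat.jpg"))  # → False
print(check_s3_container(True, None))  # → thumbnail
```

Returning the image mode from the credential check mirrors the trade-off in the text: omitting the secret keeps the portal away from the raw data at the cost of full-resolution previews.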

Local mode

In local mode, secrets are unavailable, so the AWS Access Key and AWS Secret Key must be provided directly.

Azure Hot Blob Store

- URI: Enter the URI in the format `wasbs://<storage-container-name>@<storage-account-URL>/<sub-directory>`, for example `wasbs://akridemoedge@storagebuckets.blob.core.windows.net/`. The sub-directory is optional; if one is provided, only objects within that sub-directory are accessible to Data Explorer.

- Secret Key: Credentials stored as a secret of Azure type on the Secrets page.
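To make the parts of a `wasbs://` URI concrete, the Python sketch below splits it into storage container, storage account URL, and optional sub-directory, using the sample URI from this section. `parse_wasbs_uri` and `blob_in_scope` are hypothetical helpers, and the prefix-based scope check is an assumption about how the sub-directory restriction behaves.

```python
from typing import Tuple
from urllib.parse import urlparse


def parse_wasbs_uri(uri: str) -> Tuple[str, str, str]:
    """Split wasbs://<storage-container-name>@<storage-account-URL>/<sub-directory>.

    Returns (container, account_url, sub_directory); sub_directory is "" when omitted.
    Hypothetical helper for illustration only.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "wasbs" or "@" not in parsed.netloc:
        raise ValueError(f"expected wasbs://<container>@<account-URL>/..., got {uri!r}")
    # The container name sits before "@", the storage account URL after it.
    container, account_url = parsed.netloc.split("@", 1)
    return container, account_url, parsed.path.strip("/")


def blob_in_scope(uri: str, blob_name: str) -> bool:
    """Assumed rule: with a sub-directory, only blobs under it are accessible."""
    _, _, sub_directory = parse_wasbs_uri(uri)
    return not sub_directory or blob_name.startswith(sub_directory + "/")


print(parse_wasbs_uri("wasbs://akridemoedge@storagebuckets.blob.core.windows.net/"))
# → ('akridemoedge', 'storagebuckets.blob.core.windows.net', '')
```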

Local mode

In local mode, secrets are unavailable, so the Storage Account Key must be provided directly.

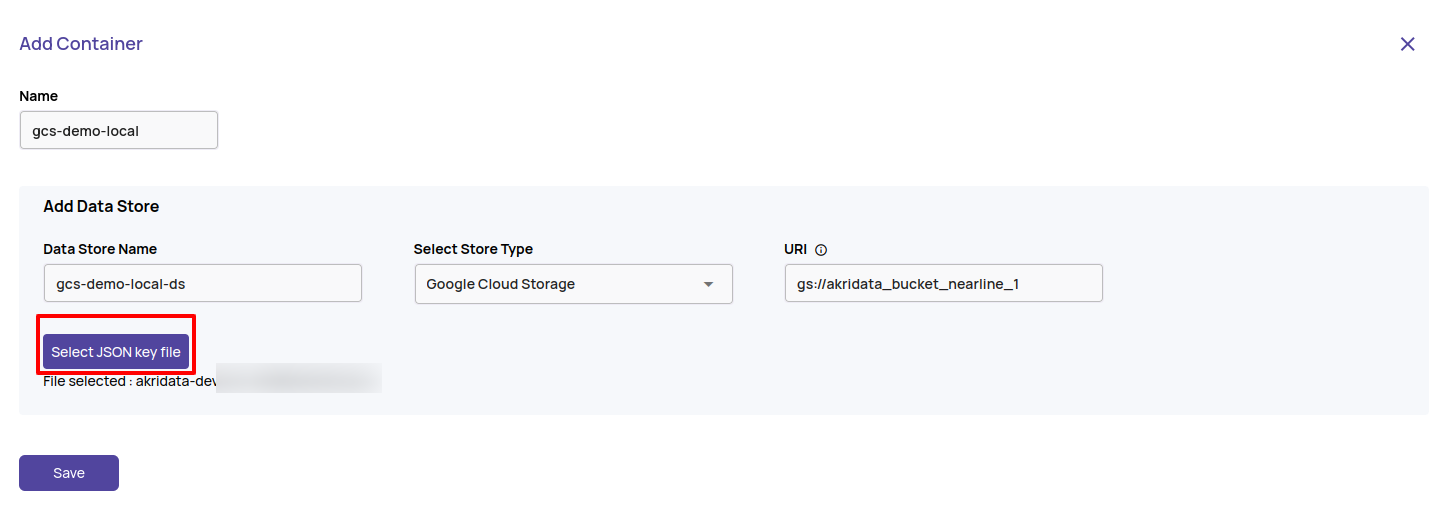

Google Cloud Storage

- URI: Enter the URI in gsutil URI format, `gs://<bucket-name>/<sub-directory>`, for example `gs://akridata_bucket_nearline_1`. The sub-directory is optional; if one is provided, only objects within that sub-directory are accessible to Data Explorer.

- Secret Key: Credentials stored as a secret of GCP type on the Secrets page.
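The gsutil-style URI splits the same way, into a bucket name and an optional sub-directory. The Python sketch below uses the sample bucket from this section; `parse_gs_uri` and `object_in_scope` are hypothetical helpers, and the prefix match is an assumed reading of the sub-directory restriction.

```python
from typing import Tuple
from urllib.parse import urlparse


def parse_gs_uri(uri: str) -> Tuple[str, str]:
    """Split gs://<bucket-name>/<sub-directory> into (bucket, sub_directory).

    Hypothetical helper for illustration only; sub_directory is "" when omitted.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "gs" or not parsed.netloc:
        raise ValueError(f"expected gs://<bucket-name>/..., got {uri!r}")
    return parsed.netloc, parsed.path.strip("/")


def object_in_scope(uri: str, object_name: str) -> bool:
    """Assumed rule: with a sub-directory, only objects under it are accessible."""
    _, sub_directory = parse_gs_uri(uri)
    return not sub_directory or object_name.startswith(sub_directory + "/")


print(parse_gs_uri("gs://akridata_bucket_nearline_1"))
# → ('akridata_bucket_nearline_1', '')
```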

Local mode

In local mode, secrets are unavailable, so the Service Account Key JSON must be provided directly.